In today’s fast-evolving AI landscape, questions around transparency, safety, and ethical use of AI models are growing louder. One particularly puzzling question stands out: Why do some AI models hide information from users?

For an AI solutions or product engineering company, this question is not merely academic: building trust, maintaining compliance, and innovating responsibly all depend on understanding it. Drawing on research, professional experience, and the practical difficulties of large-scale AI deployment, this article examines the causes of this behavior.

Understanding AI’s Hidden Layers

AI is a powerful tool. It can support decision-making, automate tasks, create content, and even simulate conversation. With that power comes a great deal of responsibility.

At times, that responsibility includes intentionally withholding information from users or denying them access to it.

Actual Data: The Information Control of AI

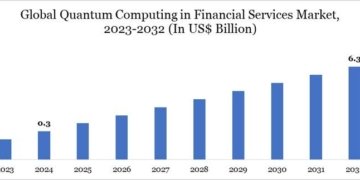

Let’s look into the figures:

Over 4.2 million requests were declined by GPT-based models for breaking safety rules, such as requests involving violence, hate speech, or self-harm, according to OpenAI’s 2023 Transparency Report.

A Stanford study on large language models (LLMs) raised concerns about “over-blocking” and its effect on user experience, finding that more than 12% of filtered queries were not intrinsically harmful but were caught by overly aggressive filters.

According to research from the AI Incident Database, nearly 30 incidents reported in 2022 alone involved AI models accidentally sharing or exposing private, sensitive, or confidential content. This resulted in stronger platform security.

By 2026, 60% of companies using generative AI will have real-time content moderation layers in place, according to a Gartner report from 2024.

Why Does AI Hide Information?

At its core, the goal of any AI model, especially a large language model (LLM), is to assist, inform, and solve problems. But that doesn’t always mean full transparency.

Safety Comes First

AI models are trained on vast datasets, including information from books, websites, forums, and more. This training data can contain harmful, misleading, or outright dangerous content.

So AI models are designed to:

Avoid sharing dangerous information like how to build weapons or commit crimes.

Reject offensive content, including hate speech or harassment.

Protect privacy by refusing to share personal or sensitive data.

Comply with ethical standards, avoiding controversial or harmful topics.

As an AI product engineering company, we often embed guardrails (automatic filters and safety protocols) into AI systems. These aren’t arbitrary; they’re required to prevent misuse and meet regulatory requirements.

Expert Insight: In projects where we developed NLP models for legal tech, we had to implement multi-tiered moderation systems that auto-redacted sensitive terms. This is not over-caution; it’s compliance in action.
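To make the idea of a multi-tiered moderation system concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not the system used in the projects described above: the pattern names, the blocklist, and the `moderate` function are all hypothetical, and a production system would rely on vetted detectors rather than two regular expressions.

```python
import re

# Hypothetical patterns for illustration only; a real deployment would use
# vetted detectors tuned to its own regulatory requirements.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}
BLOCKED_TERMS = {"build a bomb"}  # placeholder tier-1 blocklist


def moderate(text: str) -> dict:
    """Tier 1 blocks a request outright; tier 2 redacts sensitive spans."""
    lowered = text.lower()
    for term in BLOCKED_TERMS:
        if term in lowered:
            return {"action": "block", "text": "", "reasons": [f"blocked term: {term}"]}

    reasons = []
    for label, pattern in REDACTION_PATTERNS.items():
        # subn returns the rewritten text and the number of replacements made.
        text, count = pattern.subn(f"[{label} REDACTED]", text)
        if count:
            reasons.append(f"redacted {count} {label}")
    action = "redact" if reasons else "allow"
    return {"action": action, "text": text, "reasons": reasons}
```

Returning the reasons alongside the action means the calling application can tell the user what was filtered and why, rather than silently changing the text.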

Legal and Regulatory Requirements

In AI, compliance is not optional. Companies building and deploying AI must align with local and international laws, including:

GDPR and CCPA: privacy regulations requiring data protection.

COPPA: restricting the collection of personal information from children under 13.

HIPAA: safeguarding health data in medical applications.

These legal boundaries shape how much an AI model can reveal.

For example, a model deployed for healthcare diagnostics cannot disclose a patient’s medical information without authorization. This is where AI solutions companies come in, designing systems that comply with complex regulatory environments.

Preventing Exploitation or Gaming of the System

Some users attempt to jailbreak AI models to make them say or do things they shouldn’t.

To counter this, models may:

Refuse to answer certain prompts.

Deny requests that seem manipulative.

Mask internal logic to avoid reverse engineering.

As AI becomes more integrated into cybersecurity, finance, and policy applications, hiding certain operational details becomes a security feature, not a bug.

When Hiding Becomes a Problem

While the intentions are usually good, there are side effects.

Over-Filtering Hurts Usability

Many users, including academic researchers, find that AI models:

Avoid legitimate topics under the guise of safety.

Respond vaguely, creating unproductive interactions.

Fail to explain “why” an answer is withheld.

For educators or policymakers relying on AI for insight, this lack of transparency can create friction and reduce trust in the technology.

Industry Observation: In an AI-driven content analysis project for an edtech firm, over-filtering prevented the model from discussing important historical events.

We had to fine-tune it carefully to balance educational value and safety.

Hidden Bias and Ethical Challenges

If an AI model refuses to respond to a certain type of question consistently, users may begin to suspect:

Bias in training data

Censorship

Opaque decision-making

This fuels skepticism about how the model is built, trained, and governed. For AI solutions companies, this is where transparent communication and explainable AI (XAI) become crucial.

A Smarter, Safer, Transparent AI Future

So, how can we make AI more transparent while keeping users safe?

Better Explainability and User Feedback

Models should not just say, “I can’t answer that.”

They should explain why, with context.

For instance:

“This question may involve sensitive information related to personal identity. To protect user privacy, I’ve been trained to avoid this topic.”

This builds trust and makes AI systems feel more cooperative rather than authoritarian.
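One way to sketch an explained refusal in Python is shown below. The category names, the message wording, and the `explain_refusal` helper are assumptions made for illustration; they do not describe any particular vendor's implementation.

```python
from dataclasses import dataclass

# Hypothetical categories and wording, chosen only for illustration.
REFUSAL_EXPLANATIONS = {
    "privacy": (
        "This question may involve sensitive information related to personal "
        "identity. To protect user privacy, I avoid this topic."
    ),
    "safety": "This request could enable physical harm, so I can't provide details.",
}
FALLBACK = "I can't help with this request, and I don't have a more specific reason to share."


@dataclass
class Refusal:
    category: str
    message: str


def explain_refusal(category: str) -> Refusal:
    """Return a refusal that states its reason instead of a bare 'I can't answer that.'"""
    return Refusal(category=category, message=REFUSAL_EXPLANATIONS.get(category, FALLBACK))
```

Keeping the category on the returned object lets a product log which policies trigger most often, which is useful when looking for over-filtering.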

Fine-Grained Content Moderation

Instead of blanket bans, modern models use multi-level safety filters. Some emerging techniques include:

SOFAI multi-agent architecture, in which different AI components manage safety, reasoning, and user intent independently.

Adaptive filtering, which considers user role (researcher vs. child) and intent.

Deliberate reasoning engines, which use ethical frameworks to decide what can be shared.
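Adaptive filtering by user role can be sketched in a few lines of Python. This is a simplified illustration: the `Sensitivity` levels, the role names, and the ceilings are hypothetical, and the sketch covers role only, leaving out intent detection.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    GENERAL = 0
    MATURE = 1
    RESTRICTED = 2


# Hypothetical roles and the most sensitive level each may access.
ROLE_CEILING = {
    "child": Sensitivity.GENERAL,
    "general": Sensitivity.MATURE,
    "researcher": Sensitivity.RESTRICTED,
}


def is_allowed(role: str, topic_sensitivity: Sensitivity) -> bool:
    """Allow a topic only if it is at or below the ceiling for the role.

    Unknown roles fall back to the strictest ceiling.
    """
    ceiling = ROLE_CEILING.get(role, Sensitivity.GENERAL)
    return topic_sensitivity <= ceiling
```

Defaulting unknown roles to the strictest ceiling is a deliberate choice in this sketch: a missing or misspelled role should never widen access.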

For an AI product engineering company, incorporating these layers is vital in product design, especially in domains like finance, defense, or education.

Transparency in Model Training and Deployment

AI developers and companies must communicate:

What data was used for training

What filtering rules exist

What users can (and cannot) expect

Transparency helps policymakers, educators, and researchers feel confident using AI tools in meaningful ways.

Distributed Systems and Model Design

Recent work, like DeepSeek’s efficiency breakthrough, shows how rethinking distributed systems for AI can improve not just speed but transparency.

DeepSeek used a Mixture-of-Experts (MoE) architecture to reduce unnecessary communication. This can also mean less noise in the model’s decision-making path, making its logic easier to audit and interpret.
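For readers unfamiliar with Mixture-of-Experts, the core routing step can be sketched in plain Python. This is a generic top-k gating illustration, not DeepSeek's implementation; the `top_k_route` function and its inputs are hypothetical.

```python
import math


def top_k_route(gate_logits: list[float], k: int = 2) -> dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalize their weights.

    Only the selected experts would be invoked for this token; the rest are
    skipped, which is where the savings in compute and communication come from.
    """
    ranked = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    top = ranked[:k]
    peak = max(gate_logits[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(gate_logits[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}
```

The returned mapping of expert index to weight is also a compact record of which experts handled a given token, which is the kind of trace an audit could inspect.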

Traditional systems often fail because they try to fit AI workloads into outdated paradigms. Future models should focus on:

Asynchronous communication

Hierarchical attention patterns

Energy-efficient design

These changes improve not just performance but also trustworthiness and reliability, key to information transparency.

So, what does this mean for you?

If you’re in academia, policy, or industry, understanding the “why” behind AI information hiding allows you to:

Ask better questions

Choose the right AI partner

Design ethical systems

Build user trust

As an AI solutions company, we integrate explainability, compliance, and ethical design into every AI project. Whether the project involves conversational agents, AI assistants, or complex analytics engines, we help organizations build models that are powerful, compliant, and responsible.

Final Thoughts: Transparency Is Not Optional

In conclusion, AI models hide information for safety, compliance, and security reasons. But transparency, explainability, and ethical engineering are essential to building trust.

Whether you’re building products, crafting policy, or doing research, understanding this behavior can help you make smarter decisions and leverage AI more effectively.

Ready to Build AI You Can Trust?

If you’re a policymaker, researcher, or business leader looking to harness responsible AI, partner with an AI product engineering company that prioritizes transparency, compliance, and performance.

Get in touch with our AI solutions experts, and let’s build smarter, safer AI together.

Transform your ideas into intelligent, compliant AI solutions today.

Name: CrossML Private Limited

Address: 2101 Abbott Rd #7, Anchorage, Alaska, 99507

Phone Number: +1 415 854 8690

Company Email ID: business@crossml.com

CrossML Private Limited is a leading AI product engineering company delivering scalable AI software development solutions. We offer AI agentic solutions, sentiment analysis, governance, automation, and digital transformation. From legacy modernization to autonomous operations, our expert team builds custom AI tools that drive growth. Hire AI talent with our trusted staffing services.

Let’s turn your AI vision into reality. Contact https://www.crossml.com/ today and unlock smarter, faster, and future-ready solutions for your business.

This release was published on openPR.